Building Resilient Infrastructure with AWS and Kubernetes

Downtime kills momentum. One glitch in your app’s backend and users are gone, revenue drops, and trust erodes. In today’s world, where users expect 99.99% uptime and instant response times, resilience is not a luxury—it’s survival.

As businesses modernize their stack and move towards microservices, distributed systems, and containerized deployments, traditional ways of thinking about infrastructure simply don’t cut it. You need an architecture that not only scales but also heals, adapts, and recovers automatically.

This is where AWS and Kubernetes come together as a powerful combo. Kubernetes brings self-healing, intelligent orchestration, and service-level resilience. AWS offers a battle-tested, scalable backbone with services that span compute, storage, networking, and monitoring—across regions, zones, and edge locations.

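In this blog, we’ll break down how to build a resilient cloud-native infrastructure using AWS and Kubernetes. You’ll learn what resilience really means, why AWS and Kubernetes work so well together, the core building blocks and design patterns to apply, how real companies put them into practice, and which metrics tell you whether it’s working.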

Let’s dive into the future-proof way of building apps that survive chaos and thrive in it.

Understanding Resilience in Cloud Infrastructure

Resilience isn’t just about keeping your app online—it’s about how quickly and gracefully your system bounces back when something breaks. Whether it’s a traffic spike, a failed server, or a misbehaving deployment, resilient infrastructure ensures users don’t feel the impact.

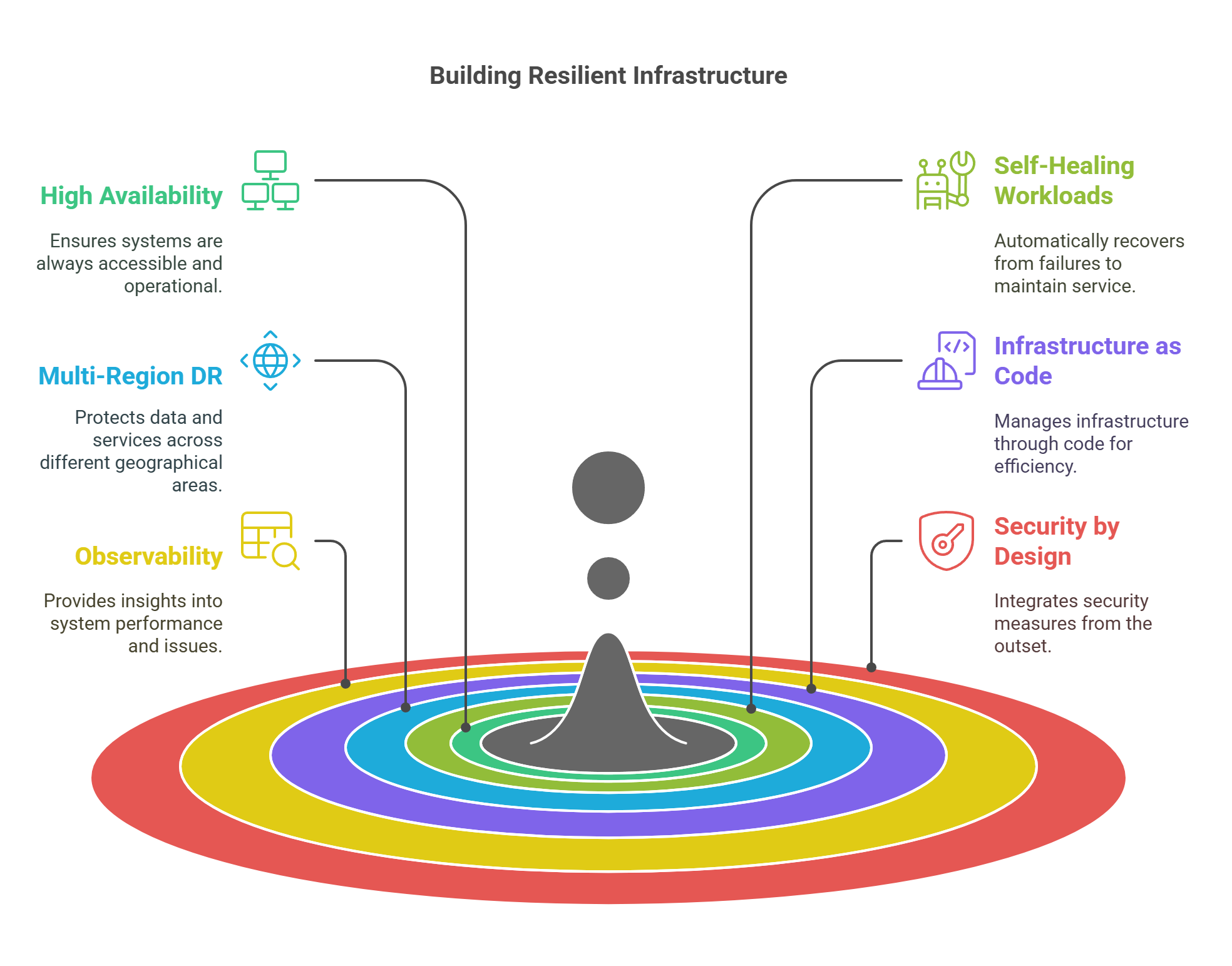

What is Resilience in the Cloud?

At its core, resilience is the system’s ability to recover from disruptions and continue functioning—without manual intervention.

It’s not the same as just having backups or monitoring. Resilient systems are designed to expect failure and recover from it as a normal part of operation.

Core Traits of Resilient Infrastructure

These five pillars form the foundation of cloud resilience:

Why It Matters

Without resilience, you’re always one issue away from a service outage, SLA violation, or major customer churn. And in a competitive landscape, that can be a death sentence.

Modern cloud-native apps must assume failure—and be architected to handle it without breaking user experience.

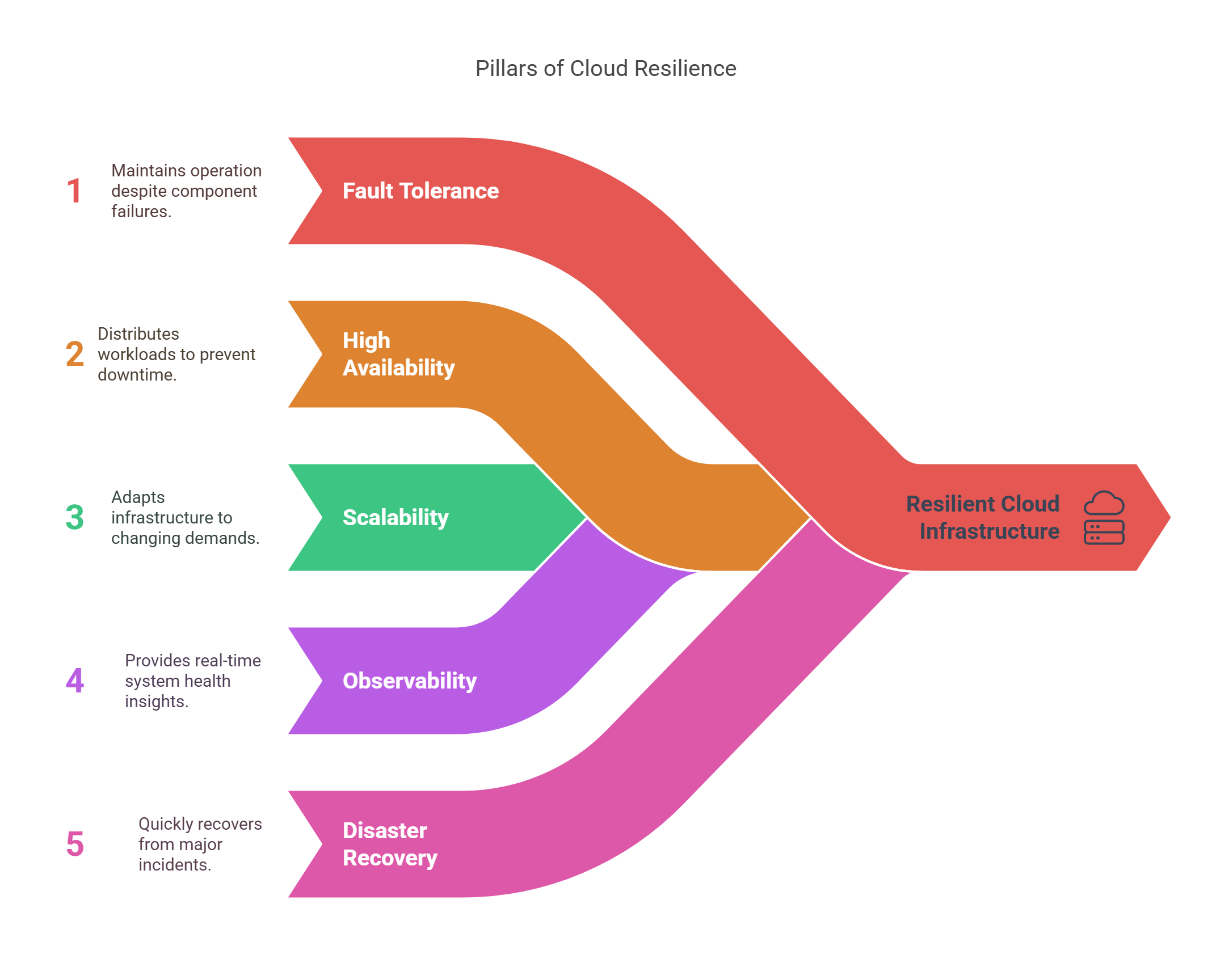

Why AWS + Kubernetes Is a Resilience Power Combo

Individually, AWS and Kubernetes are powerful. Together, they make building resilient infrastructure easier, faster, and more scalable than ever.

Kubernetes: Built for Self-Healing

Kubernetes is designed to expect failure. It automatically manages container lifecycles, restarts crashed pods, reroutes traffic, and maintains desired state with almost no manual effort.
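To make "maintains desired state" concrete, here is a toy reconciliation loop in Python. It is a simplified sketch, not how Kubernetes controllers are actually implemented: the `ToyReplicaController` class and the `web-N` pod names are hypothetical, and a real controller watches the API server instead of holding pods in a list.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    healthy: bool = True


@dataclass
class ToyReplicaController:
    """Hypothetical, simplified model of a Kubernetes-style reconciliation loop."""

    desired_replicas: int
    pods: list[Pod] = field(default_factory=list)
    next_id: int = 0

    def reconcile(self) -> None:
        # Observe: treat unhealthy pods as gone (Kubernetes would restart or replace them).
        self.pods = [p for p in self.pods if p.healthy]
        # Act: create pods until the observed count matches the desired count.
        while len(self.pods) < self.desired_replicas:
            self.pods.append(Pod(name=f"web-{self.next_id}"))
            self.next_id += 1
        # Act: remove surplus pods if the desired count was lowered.
        del self.pods[self.desired_replicas:]


controller = ToyReplicaController(desired_replicas=3)
controller.reconcile()               # creates web-0, web-1, web-2
controller.pods[1].healthy = False   # simulate a crash of web-1
controller.reconcile()               # drops web-1 and creates web-3
print([p.name for p in controller.pods])  # ['web-0', 'web-2', 'web-3']
```

Run the loop over and over and the system keeps converging back to three healthy pods, which is the core idea behind self-healing.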

Key resilience features:

AWS: Infrastructure That’s Global, Elastic, and Secure

AWS gives Kubernetes a reliable, enterprise-grade foundation to run on. It handles the infrastructure so you can focus on your apps.

Key AWS resilience boosters:

The Real Power: Integration

When you run Kubernetes on AWS via Amazon Elastic Kubernetes Service (EKS), you unlock seamless integrations:

Summary

Kubernetes handles what runs; AWS handles where it runs. You get built-in redundancy, faster recovery, and automated scaling with minimal manual touch.

If you’re aiming for uptime, elasticity, and peace of mind—this duo is the way to go.

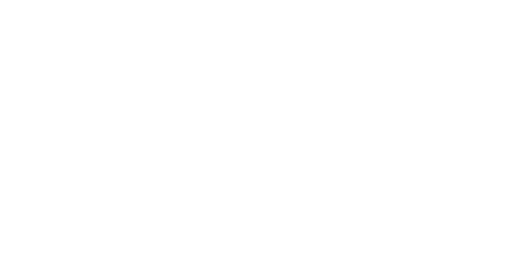

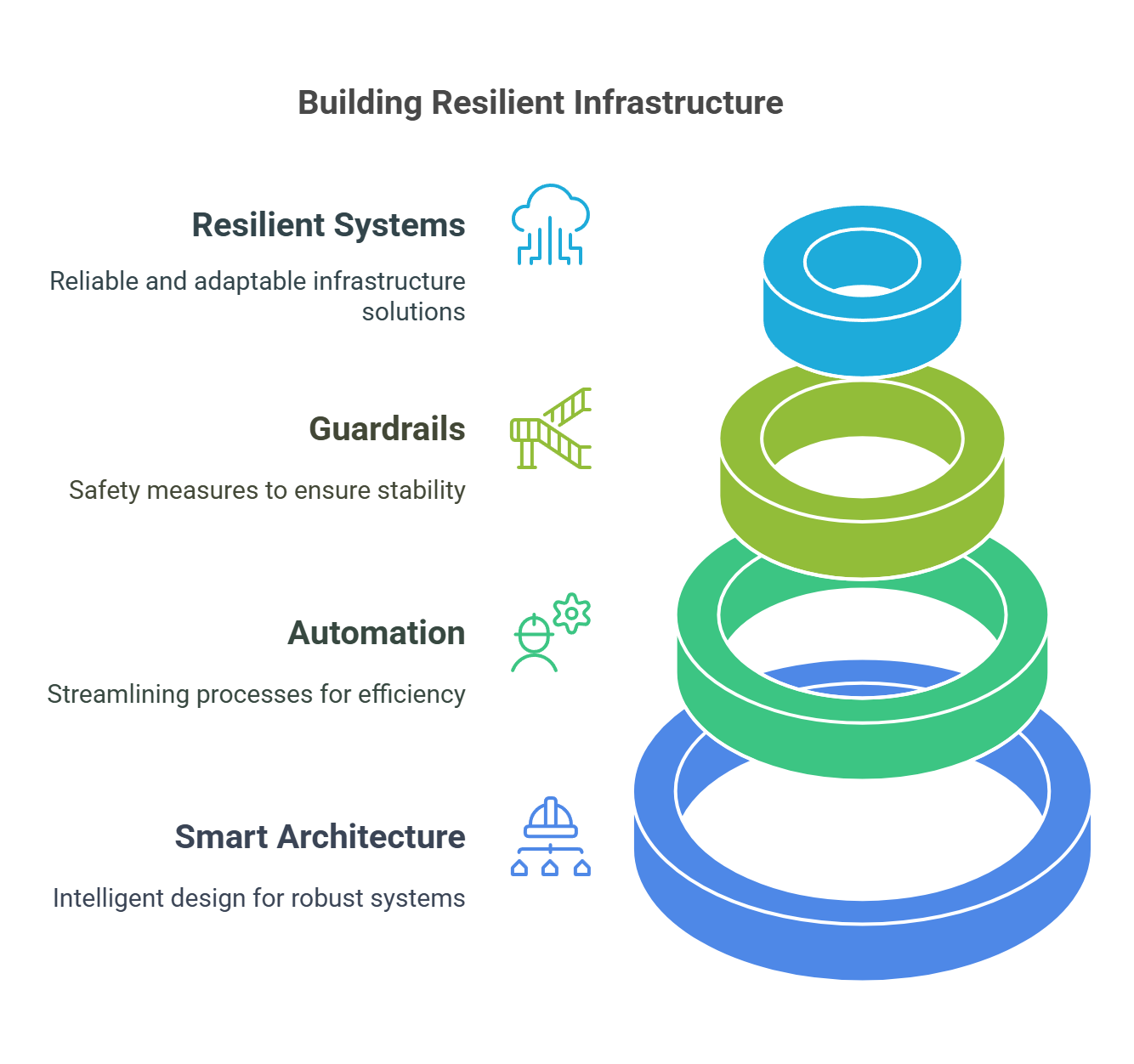

Core Building Blocks for Resilient Infrastructure

Resilient infrastructure doesn’t happen by accident. It’s built by combining smart architecture choices, automation, and guardrails across every layer—from nodes to workloads. Below are the key components you need when designing resilient systems with AWS and Kubernetes.

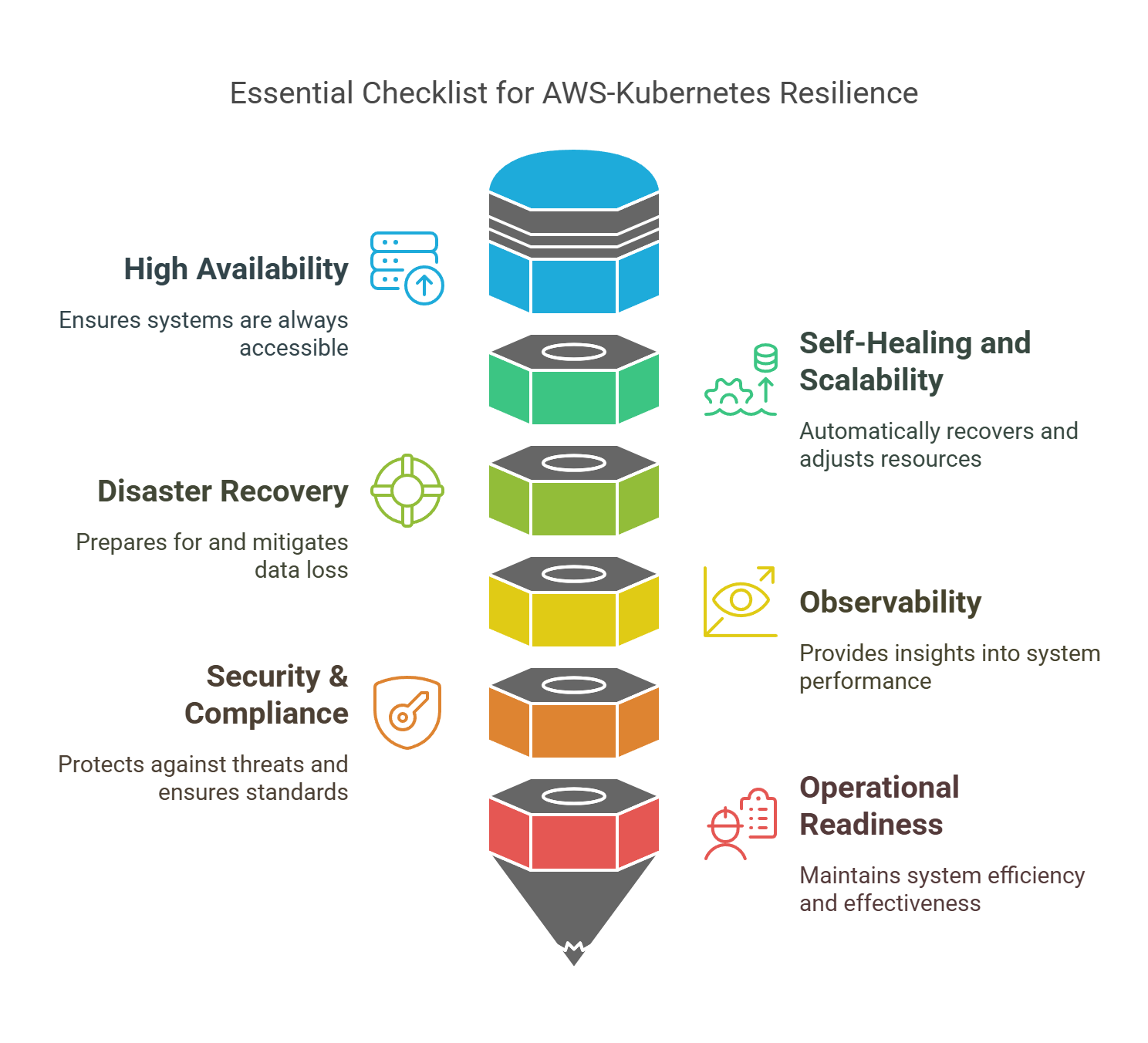

1. High Availability (HA) Cluster Design

Goal: Eliminate single points of failure.

Think of it as making sure no single failure can take down your entire system.
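A quick back-of-the-envelope calculation shows why spreading replicas across Availability Zones helps. The sketch below assumes every zone is up with the same probability and fails independently (real outages do not always respect that), and that the surviving zones have enough capacity to carry the load; the 99% figure is purely illustrative.

```python
def combined_availability(per_zone: float, zones: int) -> float:
    """Probability that at least one of `zones` independent replicas is available.

    Assumes independent failures: the system is down only if every zone is down.
    """
    if not 0.0 <= per_zone <= 1.0:
        raise ValueError("per_zone must be between 0 and 1")
    if zones < 1:
        raise ValueError("zones must be at least 1")
    return 1.0 - (1.0 - per_zone) ** zones


for n in (1, 2, 3):
    print(n, f"{combined_availability(0.99, n):.6f}")
# 1 0.990000
# 2 0.999900
# 3 0.999999
```

In this idealized model, each additional zone cuts the probability of a full outage by another factor of 100.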

2. Self-Healing Workloads

Goal: Auto-detect and recover from failures.

Kubernetes + AWS = no more 2 a.m. pages for basic restarts.
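Part of that self-healing is the kubelet restarting crashed containers with an exponential back-off. Per the Kubernetes documentation, the delay starts at 10 seconds, doubles after each crash, and is capped at five minutes; it also resets once a container runs cleanly for 10 minutes, which this sketch does not model. The helper below only reproduces that documented schedule: its name is hypothetical, and newer Kubernetes releases may tune these defaults.

```python
from __future__ import annotations


def restart_delays(crashes: int, initial: float = 10.0, cap: float = 300.0) -> list[float]:
    """Seconds waited before each successive restart of a crash-looping container."""
    delays = []
    delay = min(initial, cap)
    for _ in range(crashes):
        delays.append(delay)
        delay = min(delay * 2, cap)  # double, but never exceed the cap
    return delays


print(restart_delays(7))
# [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```

This is the behavior behind the `CrashLoopBackOff` status: the cluster keeps trying, but backs off so a persistently broken container does not hammer its dependencies.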

3. Multi-Region and Disaster Recovery (DR)

Goal: Protect against large-scale outages.

Regional isolation ensures your business doesn’t stop if one part of the cloud does.
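DR plans are usually framed around a recovery point objective (RPO): the maximum window of data you can afford to lose if a region fails. The sketch below flags data stores whose most recent cross-region replica is older than the RPO. The store names are hypothetical, and in practice the timestamps would come from your replication or backup monitoring rather than being hard-coded.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def stores_breaching_rpo(
    last_replicated: dict[str, datetime], now: datetime, rpo: timedelta
) -> list[str]:
    """Return data stores that would lose more than `rpo` of data in a failover right now."""
    return sorted(name for name, ts in last_replicated.items() if now - ts > rpo)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
replicas = {
    "orders-db": now - timedelta(minutes=2),      # hypothetical store, fresh replica
    "user-uploads": now - timedelta(minutes=20),  # hypothetical store, lagging replica
}
print(stores_breaching_rpo(replicas, now, rpo=timedelta(minutes=15)))
# ['user-uploads']
```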

4. Infrastructure as Code (IaC) + GitOps

Goal: Make environments reproducible and auditable.

If it can’t be version-controlled or recreated, it’s a liability.
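GitOps tooling continuously compares what is declared in Git with what is actually running and flags (or reverts) the difference. The function below is a minimal, hypothetical drift check over nested dictionaries; real GitOps controllers also have to ignore fields the cluster fills in on its own, which this sketch does not attempt.

```python
from __future__ import annotations

from typing import Any


def detect_drift(desired: dict[str, Any], live: dict[str, Any], path: str = "") -> list[str]:
    """List dotted paths where the live state differs from the desired (Git) state."""
    drift: list[str] = []
    for key in sorted(desired.keys() | live.keys()):
        where = f"{path}.{key}" if path else key
        if key not in live:
            drift.append(f"missing: {where}")
        elif key not in desired:
            drift.append(f"unexpected: {where}")
        elif isinstance(desired[key], dict) and isinstance(live[key], dict):
            drift.extend(detect_drift(desired[key], live[key], where))  # recurse into nested maps
        elif desired[key] != live[key]:
            drift.append(f"changed: {where} ({desired[key]!r} -> {live[key]!r})")
    return drift


desired = {"spec": {"replicas": 3, "image": "web:1.4"}}
live = {"spec": {"replicas": 1, "image": "web:1.4"}}  # someone scaled down by hand
print(detect_drift(desired, live))
# ['changed: spec.replicas (3 -> 1)']
```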

5. Observability and Monitoring

Goal: Get real-time visibility and act fast.

Observability is how you detect chaos before users do.
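As a small illustration of acting fast, here is an alert rule expressed in Python: fire when the error rate over the most recent requests crosses a threshold. In production this logic would normally live in your monitoring stack (for example a CloudWatch alarm or a Prometheus alerting rule) rather than in application code; the class, window size, and thresholds are illustrative assumptions.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.01, min_samples: int = 20) -> None:
        if not 0 < min_samples <= window:
            raise ValueError("min_samples must be between 1 and window")
        self.results = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, ok: bool) -> bool:
        """Record one request outcome and return True if the alert should fire."""
        self.results.append(ok)
        if len(self.results) < self.min_samples:
            return False  # too little data to judge
        errors = sum(1 for r in self.results if not r)
        return errors / len(self.results) > self.threshold


alert = ErrorRateAlert(window=50, threshold=0.05, min_samples=10)
for _ in range(40):
    alert.record(True)
print([alert.record(False) for _ in range(3)])
# [False, False, True]
```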

6. Security and Compliance by Design

Goal: Build trust, protect data, and stay audit-ready.

Resilience isn’t complete without security baked in from the start.
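Kubernetes RBAC is a good example of security baked in: permissions are purely additive (there are no "deny" rules), so a request is allowed only if some rule bound to the caller matches its API group, resource, and verb. The sketch below models just that matching logic. It is simplified and hypothetical, ignoring namespaces, `resourceNames`, subresources, and role bindings, and it is not the API server's actual authorizer.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(allowed: tuple[str, ...], value: str) -> bool:
    return "*" in allowed or value in allowed  # "*" acts as a wildcard


def is_allowed(rules: list[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Allow-only semantics: the request passes if ANY rule matches all three fields."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


# Least privilege: a read-only role for Deployments in the "apps" API group.
deploy_reader = [Rule(api_groups=("apps",), resources=("deployments",), verbs=("get", "list", "watch"))]
print(is_allowed(deploy_reader, "apps", "deployments", "list"))    # True
print(is_allowed(deploy_reader, "apps", "deployments", "delete"))  # False
```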

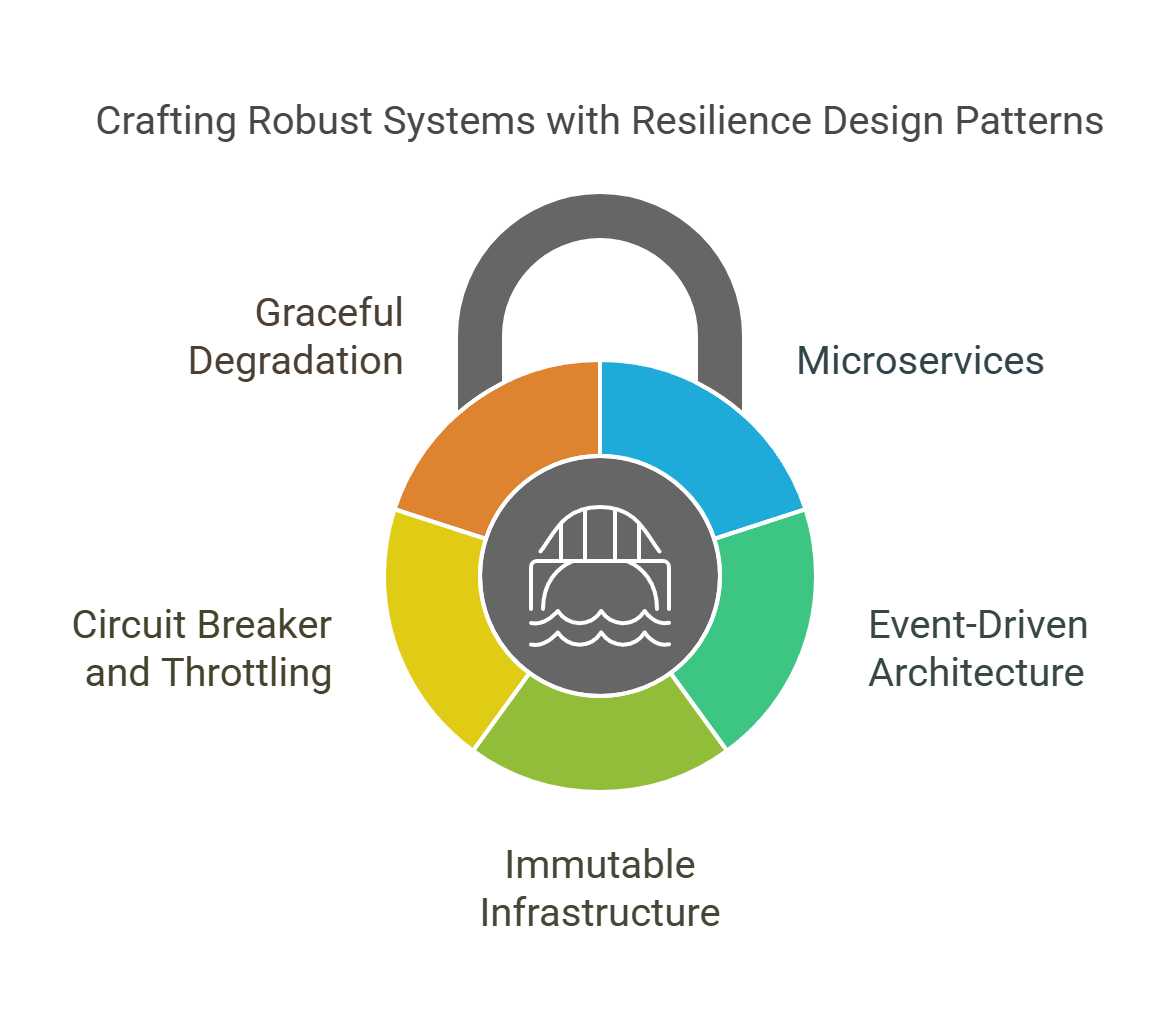

Resilience Design Patterns

Building resilient infrastructure is not just about tools—it’s about patterns. These are tried-and-tested architectural strategies that help your systems recover, reroute, and stay online—even when chaos hits.

Let’s break down the top resilience design patterns using AWS and Kubernetes:

These design patterns aren’t just technical—they’re how high-growth teams avoid downtime, keep users happy, and scale without fear.
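One widely used pattern is retrying transient failures with exponential backoff and jitter, so that many clients recovering at once do not retry in lockstep and overwhelm a struggling dependency. The function below is a minimal sketch of the "full jitter" variant; its name, defaults, and the choice of which exceptions count as transient are assumptions, and it should only wrap operations that are safe to repeat. Service meshes and the AWS SDKs offer built-in retry settings you would usually reach for first.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying `retry_on` errors with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time between 0 and the exponential cap.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


calls = 0


def flaky() -> str:
    global calls
    calls += 1
    if calls < 3:
        raise ConnectionError("transient")  # fails twice, then succeeds
    return "ok"


print(retry_with_backoff(flaky))  # ok (after two short, jittered waits)
```

Errors outside `retry_on` (for example a `ValueError` caused by a bug) propagate immediately, since retrying them would only delay the failure.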

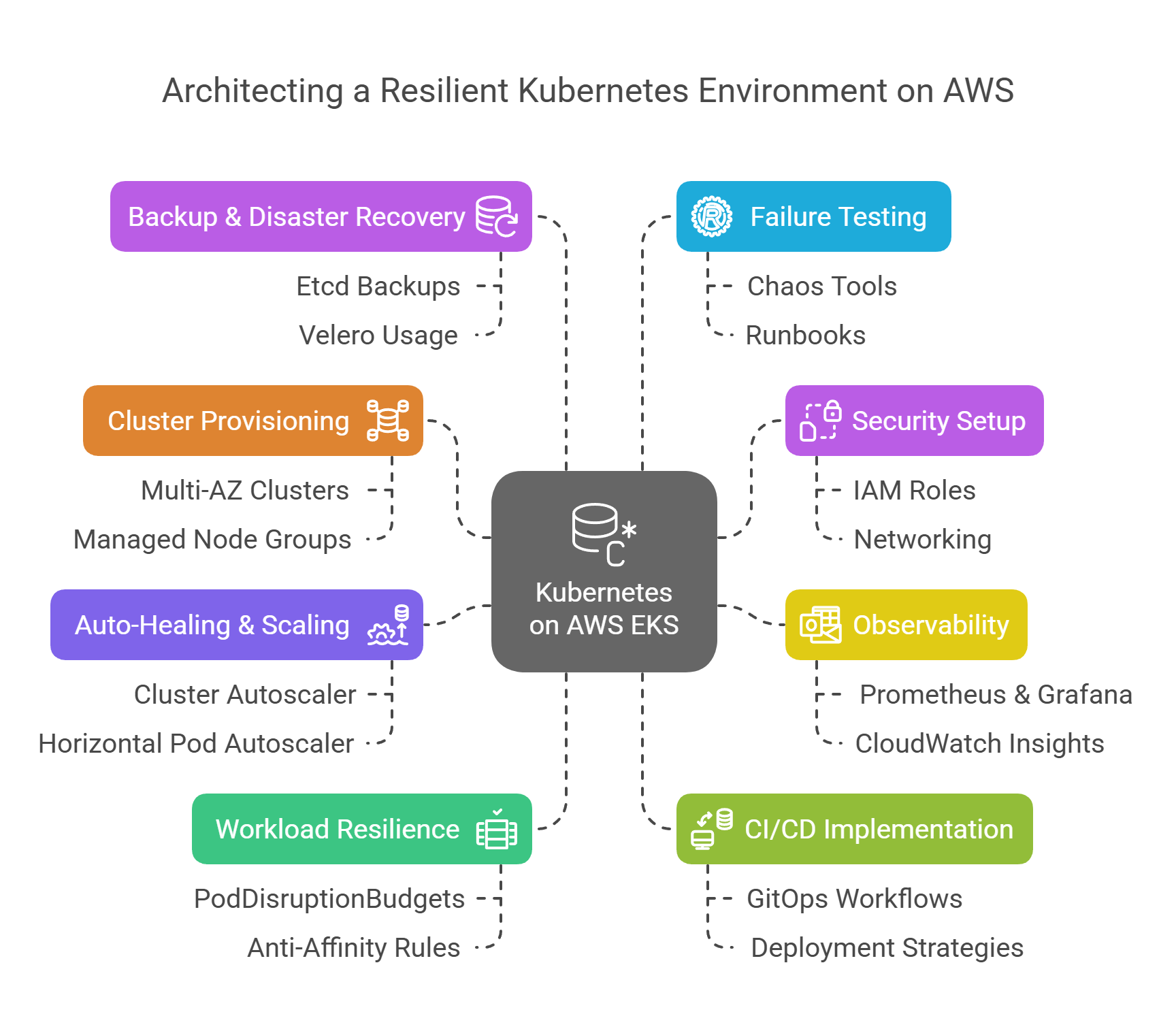

Step-by-Step Guide to Building Resilient Infrastructure on AWS + Kubernetes

You’ve got the tools. You know the patterns. Now let’s put it all together. This guide walks you through the key steps to architect and deploy a resilient, scalable, and secure Kubernetes environment on AWS using EKS.

Real-World Case Studies

Let’s back up the theory with some proof. These companies use AWS and Kubernetes not just for performance but for resilience, availability, and real-time recovery. Here’s how they do it.

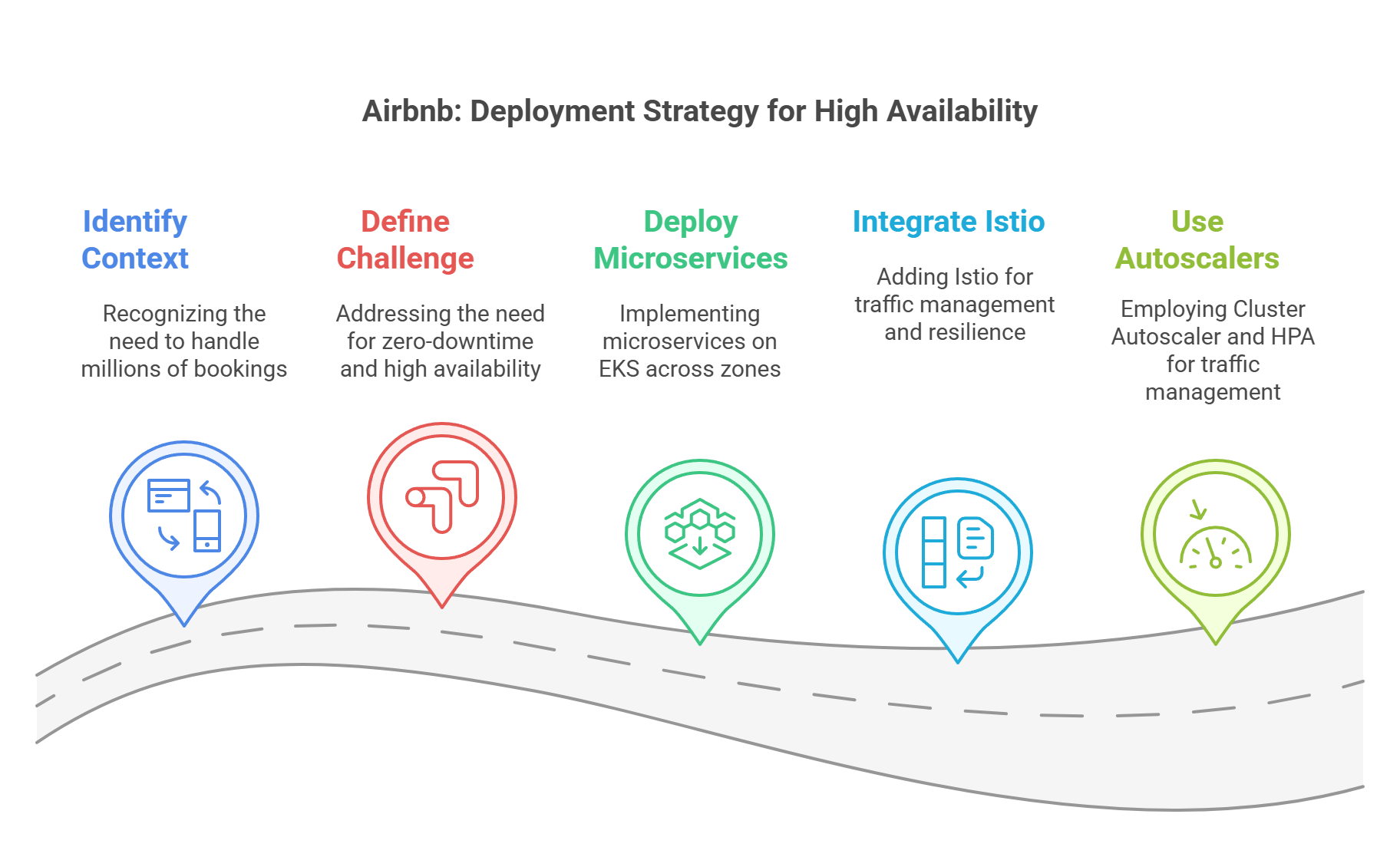

🏨 Airbnb: Scaling Through Chaos

Context: Handles millions of bookings, with heavy traffic spikes during holidays and events.

Challenge: Needed zero-downtime deployments and high availability across global regions.

Solution:

- Deployed microservices on EKS across multiple Availability Zones.

- Integrated Istio service mesh for traffic shifting, retries, and circuit breakers.

- Used Cluster Autoscaler and HPA to handle unpredictable traffic bursts.

Outcome:

99.99% uptime, fast recoveries from node failures, and safe progressive deployments even during peak events.
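For reference, the Horizontal Pod Autoscaler (HPA) mentioned above follows a documented rule: desired replicas = ceil(current replicas × current metric / target metric), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The function below is a generic sketch of that rule, not Airbnb’s configuration. It leaves out the stabilization windows, scaling policies, and missing-metric handling the real controller applies, and the min/max bounds are illustrative.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to configured bounds


print(hpa_desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(hpa_desired_replicas(4, current_metric=63, target_metric=60))   # 4
print(hpa_desired_replicas(4, current_metric=20, target_metric=60))   # 2
print(hpa_desired_replicas(8, current_metric=180, target_metric=60))  # 10
```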

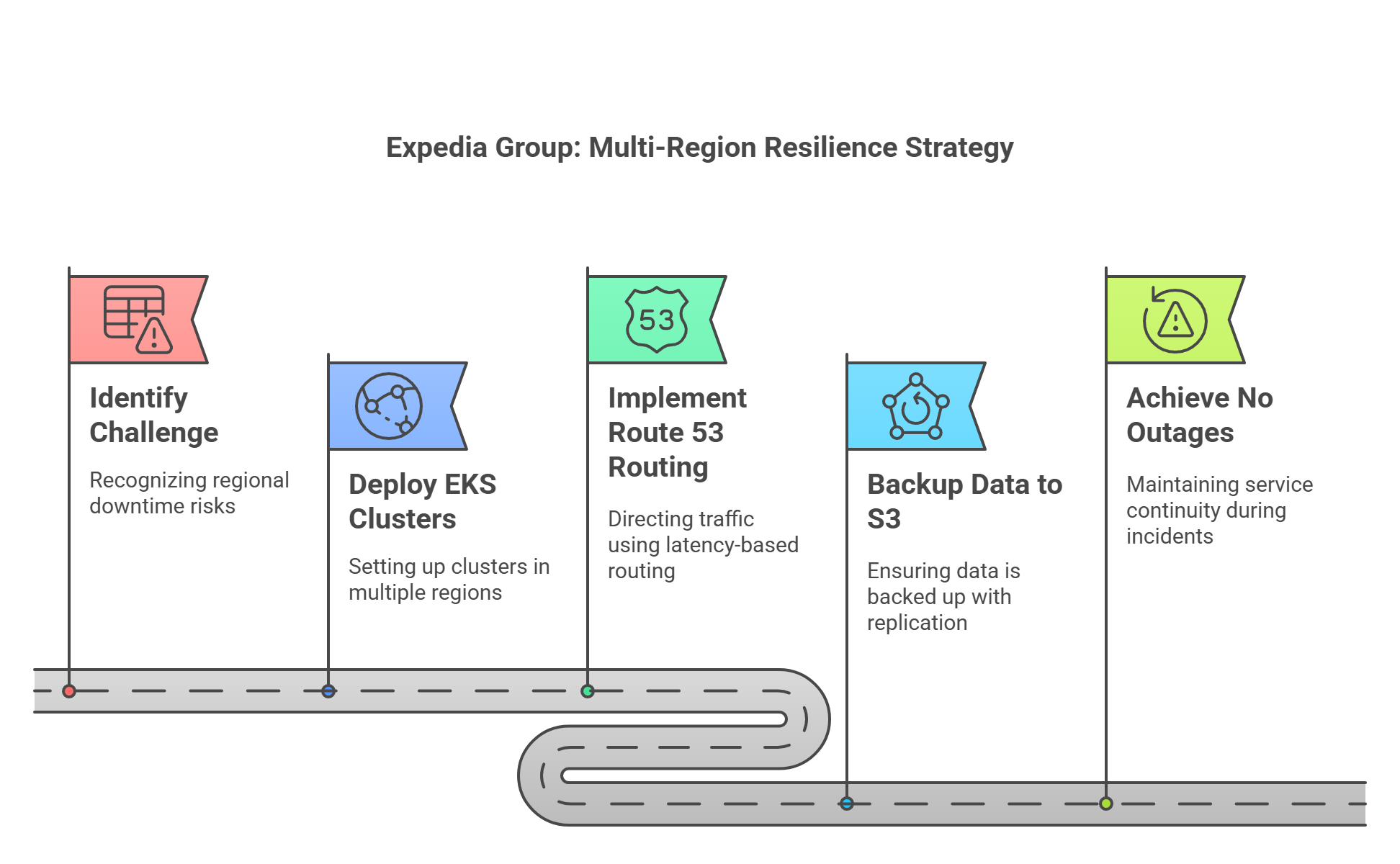

✈️ Expedia Group: Multi-Region Resilience

Context: Mission-critical travel search and booking platform.

Challenge: One region going down = millions in lost revenue.

Solution:

- Ran multiple EKS clusters in separate AWS regions.

- Used Route 53 latency-based routing to direct traffic to the lowest-latency healthy region.

- Backed up persistent data to S3 with Cross-Region Replication.

Outcome:

No customer-facing outages even during regional incidents. Real-time failover achieved.
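Conceptually, latency-based routing combined with health checks boils down to "send each user to the fastest region that is currently healthy." The sketch below captures only that decision. Route 53 measures latency and evaluates health checks itself, so the latency table, health map, and function name here are illustrative assumptions.

```python
from __future__ import annotations


def pick_region(latency_ms: dict[str, float], healthy: dict[str, bool]) -> str:
    """Return the lowest-latency region among those currently passing health checks."""
    # Regions missing from the health map are treated as unhealthy (fail closed).
    candidates = {region: ms for region, ms in latency_ms.items() if healthy.get(region, False)}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda region: candidates[region])


latency = {"us-east-1": 40.0, "us-west-2": 85.0, "eu-west-1": 120.0}
health = {"us-east-1": True, "us-west-2": True, "eu-west-1": True}
print(pick_region(latency, health))  # us-east-1

health["us-east-1"] = False          # simulate a regional incident
print(pick_region(latency, health))  # us-west-2
```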

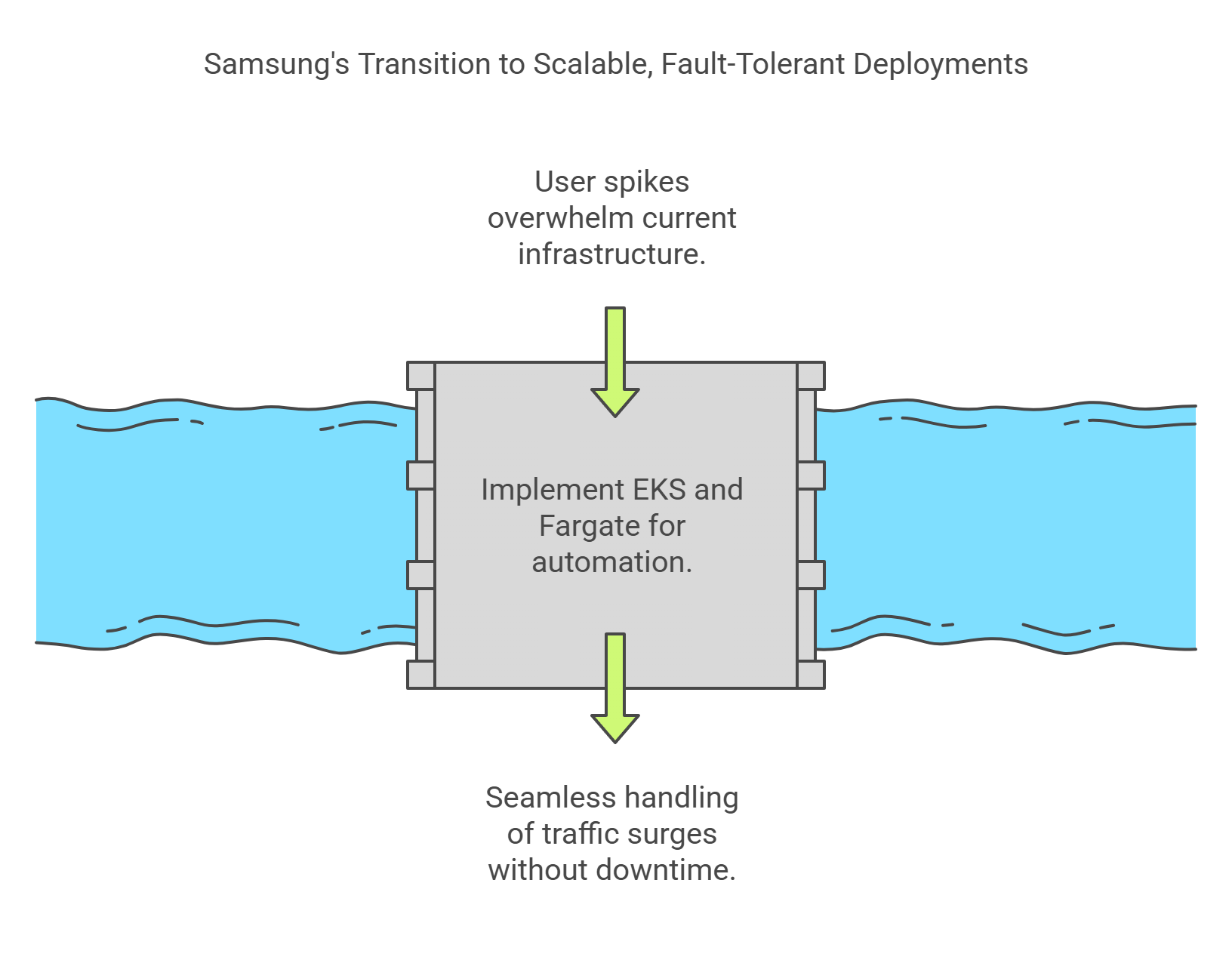

📱 Samsung: Product Launch Stability

Context: Global product drops bring massive, short-term user spikes.

Challenge: Needed automatic scaling and fault-tolerant deployments.

Solution:

- Combined EKS with AWS Fargate to run containerized apps without provisioning or managing nodes.

- Configured PodDisruptionBudgets to maintain app availability during node scaling.

- Used CloudWatch dashboards + Prometheus for visibility.

Outcome:

Handled 3x normal traffic during Galaxy launches without performance issues.
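A PodDisruptionBudget limits how many pods voluntary disruptions (such as node drains during scale-down) may take out at once. The sketch below approximates how the allowed-disruption count follows from `minAvailable` or `maxUnavailable`, rounding percentages up. It is a simplified model for intuition, not Kubernetes’ actual disruption controller, and the helper names are hypothetical.

```python
from __future__ import annotations

import math


def _resolve(value: int | str, total: int) -> int:
    """Turn an absolute count or a percentage string like "80%" into a pod count (rounding up)."""
    if isinstance(value, str) and value.endswith("%"):
        return math.ceil(total * int(value[:-1]) / 100)
    return int(value)


def disruptions_allowed(
    expected_pods: int,
    healthy_pods: int,
    *,
    min_available: int | str | None = None,
    max_unavailable: int | str | None = None,
) -> int:
    """How many more pods may be voluntarily evicted right now under this budget."""
    if (min_available is None) == (max_unavailable is None):
        raise ValueError("set exactly one of min_available or max_unavailable")
    if min_available is not None:
        desired_healthy = _resolve(min_available, expected_pods)
    else:
        desired_healthy = max(0, expected_pods - _resolve(max_unavailable, expected_pods))
    return max(0, healthy_pods - desired_healthy)


print(disruptions_allowed(5, 5, min_available="80%"))    # 1
print(disruptions_allowed(5, 4, min_available="80%"))    # 0: one pod is already down
print(disruptions_allowed(5, 5, max_unavailable="30%"))  # 2
```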

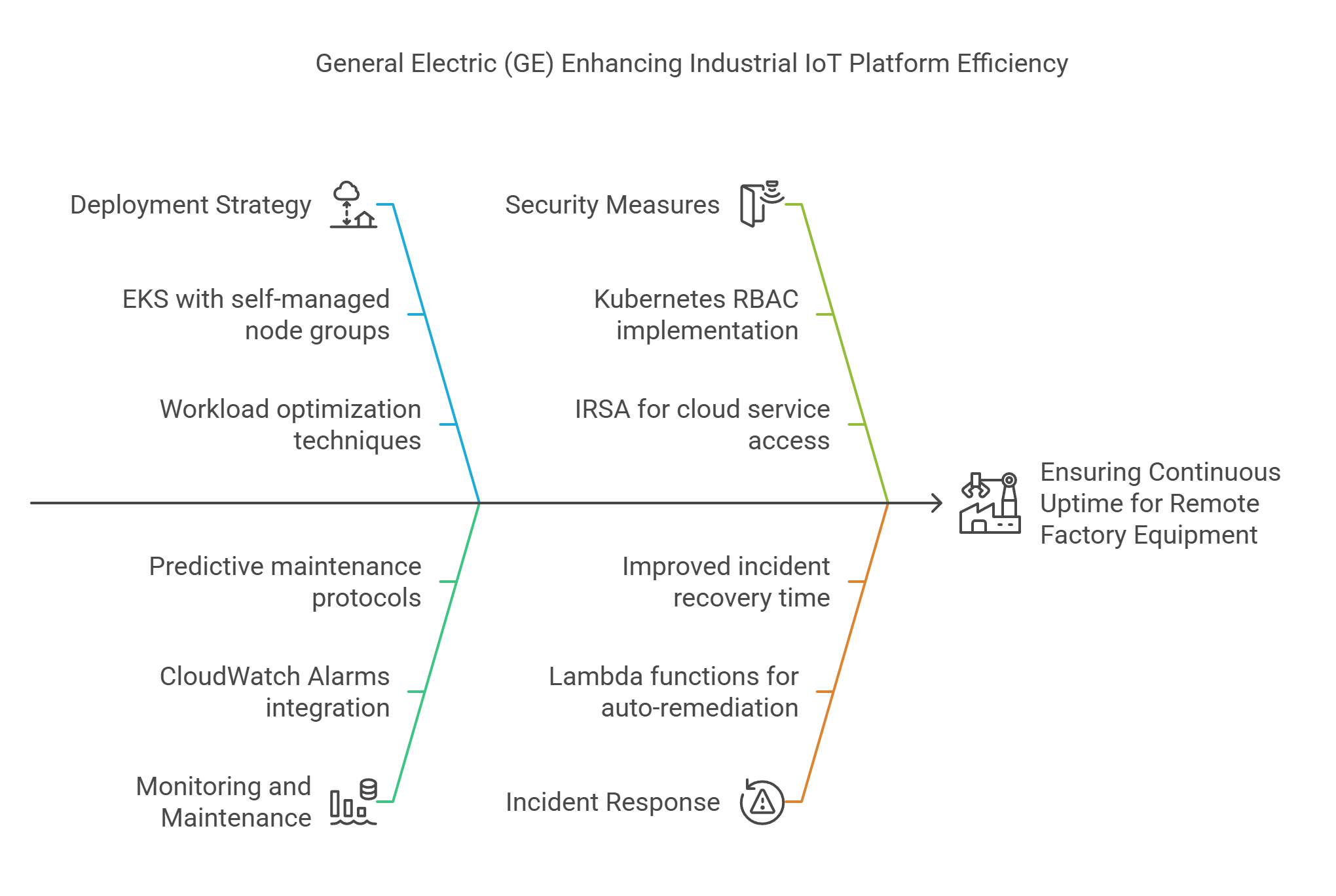

⚙️ General Electric (GE): Industrial IoT Uptime

Context: IoT platform managing critical industrial equipment across factories.

Challenge: Needed real-time monitoring, predictive maintenance, and zero downtime.

Solution:

- Deployed workloads on EKS with self-managed node groups.

- Integrated CloudWatch Alarms and Lambda functions for auto-remediation.

- Applied Kubernetes RBAC and IAM Roles for Service Accounts (IRSA) for secure, role-based access to AWS services.

Outcome:

Improved incident recovery time by 40%, ensured continuous uptime for remote factory equipment.
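The alarm-plus-Lambda pattern can be sketched as a handler that reacts only to alarms entering the `ALARM` state and passes the affected instance to a remediation step. This is a hypothetical example, not GE’s code. It assumes the alarm reaches Lambda through an SNS topic and follows the SNS alarm message layout as we understand it (`NewStateValue`, and `Trigger.Dimensions` entries with `name`/`value` keys), which you should verify against a real payload. The remediation is injected as a plain function so the logic can be tested without AWS credentials.

```python
from __future__ import annotations

import json
from typing import Any, Callable


def replace_node(instance_id: str) -> None:
    """Hypothetical remediation step; a real one might cordon and replace the EC2 node."""
    print(f"remediating {instance_id}")


def handler(
    event: dict[str, Any],
    context: Any = None,
    remediate: Callable[[str], None] = replace_node,
) -> dict[str, Any]:
    """Lambda-style entry point for CloudWatch alarms delivered via SNS (assumed format)."""
    actions = []
    for record in event.get("Records", []):
        alarm = json.loads(record["Sns"]["Message"])  # the SNS message body is a JSON string
        if alarm.get("NewStateValue") != "ALARM":
            continue  # ignore transitions back to OK or INSUFFICIENT_DATA
        for dim in alarm.get("Trigger", {}).get("Dimensions", []):
            if dim.get("name") == "InstanceId":
                remediate(dim["value"])
                actions.append({"alarm": alarm.get("AlarmName"), "instance": dim["value"]})
    return {"remediated": actions}


sample = {
    "AlarmName": "node-status-check-failed",  # hypothetical alarm name
    "NewStateValue": "ALARM",
    "Trigger": {"Dimensions": [{"name": "InstanceId", "value": "i-0123456789abcdef0"}]},
}
print(handler({"Records": [{"Sns": {"Message": json.dumps(sample)}}]}))
```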

These stories show that resilience is not just a best practice—it’s a competitive advantage. The right mix of AWS services and Kubernetes features can help any business stay online, scale fast, and recover instantly.

Measuring Resilience: Metrics That Matter

You can’t improve what you don’t measure. To truly build and maintain resilient infrastructure, you need the right set of metrics—ones that tell you how well your system recovers, adapts, and performs under stress.

Here are the metrics that actually matter when it comes to resilience:
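Two common resilience metrics, mean time to recovery (MTTR) and mean time between failures (MTBF), along with plain availability, can be derived from nothing more than an incident log. The function below is a minimal sketch assuming each incident is a (start, resolved) pair inside the measurement period and that incidents do not overlap; the sample incidents are made up.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def resilience_metrics(
    incidents: list[tuple[datetime, datetime]], period: timedelta
) -> dict[str, float]:
    """MTTR (minutes), MTBF (hours), and availability for one measurement period."""
    downtime = sum((end - start for start, end in incidents), timedelta())
    uptime = period - downtime
    if not incidents:
        return {"mttr_minutes": 0.0, "mtbf_hours": float("inf"), "availability": 1.0}
    return {
        "mttr_minutes": downtime / len(incidents) / timedelta(minutes=1),
        "mtbf_hours": uptime / len(incidents) / timedelta(hours=1),
        "availability": uptime / period,
    }


t0 = datetime(2024, 3, 1)
incidents = [
    (t0 + timedelta(days=3), t0 + timedelta(days=3, minutes=10)),    # 10-minute outage
    (t0 + timedelta(days=17), t0 + timedelta(days=17, minutes=20)),  # 20-minute outage
]
m = resilience_metrics(incidents, period=timedelta(days=30))
print(m["mttr_minutes"], m["mtbf_hours"], f"{m['availability']:.4%}")
# 15.0 359.75 99.9306%
```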

🧠 Bonus: Use SLOs + SLIs

Set Service Level Objectives (SLOs) and monitor Service Level Indicators (SLIs) for core services: for example, 99.95% uptime for the API, response times under 1 s, and an error rate below 1%.

By continuously measuring these indicators, you’re not just putting out fires—you’re building fireproof systems.
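An SLO also gives you an error budget: the amount of unreliability you can "spend" before breaching it. The helpers below are a simple sketch assuming a time-based availability SLO over a rolling window (30 days here, an illustrative choice); request-based SLOs would count failed requests instead of minutes.

```python
from datetime import timedelta


def error_budget(slo: float, window: timedelta = timedelta(days=30)) -> timedelta:
    """Downtime allowed by an availability SLO (e.g. 0.9995) over the window."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be strictly between 0 and 1")
    return window * (1.0 - slo)


def budget_consumed(downtime: timedelta, slo: float, window: timedelta = timedelta(days=30)) -> float:
    """Fraction of the error budget already spent (above 1.0 means the SLO is breached)."""
    return downtime / error_budget(slo, window)


budget = error_budget(0.9995)
print(round(budget / timedelta(minutes=1), 1))                  # 21.6
print(f"{budget_consumed(timedelta(minutes=12), 0.9995):.0%}")  # 56%
```

In other words, a 99.95% monthly target leaves roughly 21.6 minutes of downtime, and a single 12-minute incident already burns more than half of it.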

Checklist: AWS + Kubernetes Resilience Best Practices

Use this checklist to audit or guide your infrastructure setup. These are the essentials that keep your Kubernetes workloads resilient on AWS.

Keep this checklist close and review it regularly as your infrastructure evolves.

Accelerate Resilience with CloudJournee

Building resilient infrastructure isn’t just about having the right tools—it’s about using them the right way. And that’s where we come in.

At CloudJournee, we help businesses like yours design, deploy, and scale secure, resilient, and high-performing Kubernetes infrastructure on AWS.

Whether you’re:

- Launching your first EKS cluster,

- Migrating monoliths to microservices,

- Automating failovers and backups,

- Or stress-testing your system for failure readiness,

—we’ve got you covered.

🚀 What We Offer:

🎯 Ready to Build Resilience Into Your Infrastructure?

Start with a Free Resilience Assessment where we’ll:

✅ Identify single points of failure

✅ Review your scaling and recovery strategies

✅ Recommend quick wins and long-term improvements

Let’s make sure your systems stay online—no matter what.